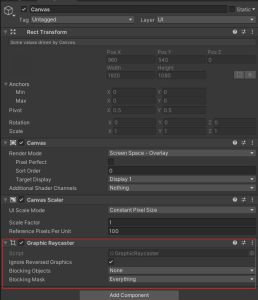

In Unity, UI raycasts are handled by the GraphicRaycaster component.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Excerpt from Unity's UI source (GraphicRaycaster); other members,
// including the `canvas` property used below, are omitted.
[RequireComponent(typeof(Canvas))]
public class GraphicRaycaster : BaseRaycaster
{
    public override int sortOrderPriority
    {
        get
        {
            // We need to return the sorting order here as distance will all be 0 for overlay.
            if (canvas.renderMode == RenderMode.ScreenSpaceOverlay)
                return canvas.sortingOrder;

            return base.sortOrderPriority;
        }
    }

    public override int renderOrderPriority
    {
        get
        {
            // We need to return the sorting order here as distance will all be 0 for overlay.
            if (canvas.renderMode == RenderMode.ScreenSpaceOverlay)
                return canvas.rootCanvas.renderOrder;

            return base.renderOrderPriority;
        }
    }
}
```

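The code above shows that GraphicRaycaster requires a Canvas component. For Screen Space - Overlay canvases, these two properties report the Canvas's sorting values; in every other mode they fall back to the base class. EventSystem later uses them to order raycast results before dispatching events. (We're not digging into EventSystem today, so I'll just mention it and skip how that part works.)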

Next, here is how GraphicRaycaster actually performs the raycast:

```csharp
// Excerpt from GraphicRaycaster. s_SortedGraphics is a static List<Graphic>
// declared elsewhere in the class.
private static void Raycast(Canvas canvas, Camera eventCamera, Vector2 pointerPosition, IList<Graphic> foundGraphics, List<Graphic> results)
{
    // Necessary for the event system
    int totalCount = foundGraphics.Count;
    for (int i = 0; i < totalCount; ++i)
    {
        Graphic graphic = foundGraphics[i];

        // -1 means it hasn't been processed by the canvas, which means it isn't actually drawn
        if (!graphic.raycastTarget || graphic.canvasRenderer.cull || graphic.depth == -1)
            continue;

        if (!RectTransformUtility.RectangleContainsScreenPoint(graphic.rectTransform, pointerPosition, eventCamera))
            continue;

        if (eventCamera != null && eventCamera.WorldToScreenPoint(graphic.rectTransform.position).z > eventCamera.farClipPlane)
            continue;

        if (graphic.Raycast(pointerPosition, eventCamera))
        {
            s_SortedGraphics.Add(graphic);
        }
    }

    s_SortedGraphics.Sort((g1, g2) => g2.depth.CompareTo(g1.depth));
    totalCount = s_SortedGraphics.Count;
    for (int i = 0; i < totalCount; ++i)
        results.Add(s_SortedGraphics[i]);

    s_SortedGraphics.Clear();
}
```

The eventCamera depends on the Canvas render mode:

```csharp
// Excerpt from GraphicRaycaster
public override Camera eventCamera
{
    get
    {
        if (canvas.renderMode == RenderMode.ScreenSpaceOverlay
            || (canvas.renderMode == RenderMode.ScreenSpaceCamera && canvas.worldCamera == null))
            return null;

        return canvas.worldCamera != null ? canvas.worldCamera : Camera.main;
    }
}
```
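The sketch below is a minimal Unity-free version of the same decision, which makes the branching explicit. `SketchRenderMode`, `EventCameraSketch`, and `Resolve` are hypothetical names. Cameras are modeled as strings. A `null` worldCamera means no camera is assigned, and a `null` mainCamera stands in for `Camera.main` finding no camera tagged MainCamera.

```csharp
// Hypothetical stand-in for UnityEngine.RenderMode so the sketch runs without Unity.
public enum SketchRenderMode { ScreenSpaceOverlay, ScreenSpaceCamera, WorldSpace }

public static class EventCameraSketch
{
    // Mirrors the branching of GraphicRaycaster.eventCamera.
    // worldCamera stands in for canvas.worldCamera, mainCamera for Camera.main.
    public static string Resolve(SketchRenderMode mode, string worldCamera, string mainCamera)
    {
        // Overlay never uses a camera; Screen Space - Camera with no camera assigned
        // is treated the same way for raycasting.
        if (mode == SketchRenderMode.ScreenSpaceOverlay ||
            (mode == SketchRenderMode.ScreenSpaceCamera && worldCamera == null))
            return null;

        // Otherwise prefer the canvas's own camera and fall back to the main camera.
        return worldCamera != null ? worldCamera : mainCamera;
    }
}
```

The original uses `!= null ? :` rather than `??`, and in real Unity code that matters. `UnityEngine.Object` overloads `==`, so a destroyed camera compares equal to `null`. The `??` operator, by contrast, only checks for a true null reference.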

`foundGraphics` comes from `var canvasGraphics = GraphicRegistry.GetGraphicsForCanvas(canvas);`, which returns all the Graphic components registered under that canvas.
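The sketch below roughly models that lookup, assuming a simple dictionary from canvas to a list of graphics. `GraphicRegistrySketch` and its methods are hypothetical. As far as I can tell from the UI source, Unity's real `GraphicRegistry` is a singleton. Graphics register with it in `Graphic.OnEnable` and unregister in `OnDisable`, which is why disabled Graphics don't end up in `foundGraphics`.

```csharp
using System.Collections.Generic;

// Simplified, hypothetical model of a per-canvas graphic registry.
public class GraphicRegistrySketch<TCanvas, TGraphic>
{
    private readonly Dictionary<TCanvas, List<TGraphic>> _byCanvas =
        new Dictionary<TCanvas, List<TGraphic>>();

    // Called when a graphic becomes active under a canvas (Unity does this from Graphic.OnEnable).
    public void Register(TCanvas canvas, TGraphic graphic)
    {
        if (!_byCanvas.TryGetValue(canvas, out var list))
        {
            list = new List<TGraphic>();
            _byCanvas[canvas] = list;
        }
        if (!list.Contains(graphic))
            list.Add(graphic); // no duplicates, like a set
    }

    // Called when the graphic is disabled; drops the canvas entry once it is empty.
    public void Unregister(TCanvas canvas, TGraphic graphic)
    {
        if (_byCanvas.TryGetValue(canvas, out var list) && list.Remove(graphic) && list.Count == 0)
            _byCanvas.Remove(canvas);
    }

    // What the raycaster iterates over: only graphics registered for this canvas.
    public IList<TGraphic> GetGraphicsForCanvas(TCanvas canvas)
    {
        if (_byCanvas.TryGetValue(canvas, out var list))
            return list;
        return new List<TGraphic>(); // canvas with no registered graphics
    }
}
```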

With that, the raycast function is easy to follow:

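- Parameters: `canvas` (which the code never actually uses, so why pass it in at all?), the event camera, the pointer position, the candidate Graphic components, and the result list.

- Loop over the Graphics and skip any that meet one of these conditions:
  - `raycastTarget` is turned off.
  - The Graphic is culled by its `CanvasRenderer`.
  - It has `depth == -1`, meaning the canvas hasn't processed it yet and it isn't actually drawn.
  - Its `RectTransform` doesn't contain the pointer.
  - There is an event camera and the Graphic lies beyond that camera's far clip plane.

- For the remaining Graphics, call `Graphic.Raycast`. The hits are sorted by `depth` in descending order (topmost first) and copied into the result list.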
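The filtering and sorting steps above can be simulated without Unity. `FakeGraphic` and `RaycastSketch` are hypothetical names. Every geometric test (`RectangleContainsScreenPoint`, the far-clip check, `Graphic.Raycast`) is replaced by a precomputed boolean, so only the control flow and the depth ordering are modeled.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for UnityEngine.UI.Graphic; geometric results are plain flags.
public class FakeGraphic
{
    public string Name;
    public bool RaycastTarget = true;
    public bool Culled;             // canvasRenderer.cull
    public int Depth;               // -1 = not processed by the canvas, i.e. not drawn
    public bool InsideRect = true;  // RectangleContainsScreenPoint result
    public bool BeyondFarClip;      // z > farClipPlane (only checked when a camera exists)
    public bool RaycastHit = true;  // Graphic.Raycast result
}

public static class RaycastSketch
{
    public static List<FakeGraphic> Raycast(IList<FakeGraphic> found, bool hasEventCamera)
    {
        var sorted = new List<FakeGraphic>();
        foreach (var g in found)
        {
            // Same early-outs, in the same order, as GraphicRaycaster.Raycast.
            if (!g.RaycastTarget || g.Culled || g.Depth == -1) continue;
            if (!g.InsideRect) continue;
            if (hasEventCamera && g.BeyondFarClip) continue;
            if (g.RaycastHit) sorted.Add(g);
        }
        // Highest depth first: the graphic drawn on top becomes the first result.
        sorted.Sort((g1, g2) => g2.Depth.CompareTo(g1.Depth));
        return sorted;
    }
}
```

One detail carries over from the original. `List<T>.Sort` is not a stable sort, so graphics with equal `depth` are not guaranteed to keep their input order.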

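So GraphicRaycaster only ever hits Graphic components. If you want your own component to receive UI raycasts, just write one that derives from Graphic. You can also make sure it never produces vertices. That gives you a component that isn't rendered but still receives raycasts, for example: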

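```csharp
using UnityEngine.UI;

// A Graphic that still receives UI raycasts but never asks to be redrawn.
public class NoDrawingRayCast : Graphic
{
    // Material changes would normally trigger a material rebuild; do nothing instead.
    public override void SetMaterialDirty() { }

    // Color changes (and anything else that dirties vertices) would normally trigger a mesh rebuild.
    public override void SetVerticesDirty() { }
}
```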

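Graphic defines both `material` and `color`. Overriding the two SetDirty functions ensures that changing the color or material won't trigger a rebuild that causes the component to be drawn. If that still doesn't feel safe enough, you can also override `OnPopulateMesh` and clear the vertex data, which guarantees nothing gets drawn~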
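A toy model shows why empty overrides are enough. `ToyGraphic` and `ToyNoDrawGraphic` are hypothetical classes that strip away Unity's rebuild machinery; the real `Graphic` registers itself with `CanvasUpdateRegistry` when dirtied. They keep only the shape of the mechanism. Property setters call the virtual `SetVerticesDirty` and `SetMaterialDirty` hooks, so overriding those hooks with empty bodies means no rebuild is ever requested.

```csharp
using System.Collections.Generic;

// Toy model: in Unity's Graphic, the color setter calls SetVerticesDirty() and the
// material setter calls SetMaterialDirty(); here those hooks just record a pending rebuild.
public class ToyGraphic
{
    public readonly List<string> PendingRebuilds = new List<string>();
    private string _color = "white";
    private string _material = "default";

    public string Color
    {
        get => _color;
        set { if (_color != value) { _color = value; SetVerticesDirty(); } }
    }

    public string Material
    {
        get => _material;
        set { if (_material != value) { _material = value; SetMaterialDirty(); } }
    }

    public virtual void SetVerticesDirty() => PendingRebuilds.Add("vertices");
    public virtual void SetMaterialDirty() => PendingRebuilds.Add("material");
}

// Same idea as NoDrawingRayCast: the values still change, but no rebuild is queued.
public class ToyNoDrawGraphic : ToyGraphic
{
    public override void SetVerticesDirty() { }
    public override void SetMaterialDirty() { }
}
```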